This post describes the basics of Server-Sent Events (SSE).

If you already know the basic concepts, please refer to my post Server Sent Event - Development and Test Automation, which focuses entirely on end-to-end (E2E) SSE development and on the challenges of testing SSE, precisely on how to automate SSE tests.

As always, you can find the code in my GitHub account, BeTheCodeWithYou, in the repository Spring-Boot-SSE.

Please give the repo a star if you like it :-)

Alright, with this post let's talk about the SSE basics.

SSE Concept:-

Server-Sent Events are events (data) sent from the server to the client over an HTTP connection.

This connection is one-directional, from server to client. That means that once the client connects to the server, the server can from then on send any real-time notifications generated on the server side to the client.

The client can be a mobile app, a browser-based app, or any other client that supports HTTP connections.

SSE use cases:-

You will have seen SSE use cases around you in day-to-day life:

1) Continuous updates of train times on the display panel on the platform.

2) Continuously rolling stock updates.

3) Real-time counter increments on your social media 'likes' icon.

And there could be many more...

How does SSE work:-

The client initiates a connection to the server over HTTP by calling a REST endpoint on the server. In return, the response must carry the Content-Type header with the value "text/event-stream".

This tells the client that a connection is established and that a stream is open for sending events from the server to the client.

In the browser there is a special object called "EventSource" that handles the connection and converts the responses into events.
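To make "converts the responses into events" more concrete, here is a minimal JDK-only sketch of how a text/event-stream body can be split into events. It is only an illustration of the idea, not the browser's EventSource implementation; the class name SseParser and the Event record are assumptions made for this example, and it handles only the data and event fields with "\n" line endings (records need Java 16 or newer).

```java
import java.util.ArrayList;
import java.util.List;

final class SseParser {

    /** Hypothetical value type for this sketch: event type plus its data payload. */
    record Event(String type, String data) {}

    /** Splits a complete event-stream text into events; a blank line ends each event. */
    static List<Event> parse(String stream) {
        List<Event> events = new ArrayList<>();
        String type = "message";              // default type when no "event:" field is sent
        StringBuilder data = new StringBuilder();
        boolean hasData = false;

        for (String line : stream.split("\n", -1)) {
            if (line.isEmpty()) {
                // Blank line: dispatch the event collected so far, if it carried data.
                if (hasData) {
                    events.add(new Event(type, data.toString()));
                }
                type = "message";
                data.setLength(0);
                hasData = false;
            } else if (line.startsWith(":")) {
                // Lines starting with a colon are comments and are ignored.
            } else {
                int colon = line.indexOf(':');
                String field = colon < 0 ? line : line.substring(0, colon);
                String value = colon < 0 ? "" : line.substring(colon + 1);
                if (value.startsWith(" ")) {
                    value = value.substring(1); // one leading space after the colon is dropped
                }
                if (field.equals("data")) {
                    if (hasData) {
                        data.append('\n');      // several data lines are joined with newlines
                    }
                    data.append(value);
                    hasData = true;
                } else if (field.equals("event")) {
                    type = value;
                }
            }
        }
        return events;
    }
}
```

For example, parsing "data: hello\n\n" yields a single event of type message with the data hello, which is what the browser would hand to the message listener.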

Message-Formats:-

SSE only supports text data, meaning the server can only send UTF-8 encoded text to the client.

Binary streaming, while possible, is inefficient with SSE because the binary data first has to be encoded as text (for example with Base64). In that case, WebSocket would be a better choice for binary data transfer.
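As a rough illustration of what that text looks like on the wire, the sketch below builds a single SSE message by hand. Each message is a set of "field: value" lines ended by one blank line. The class and method names (SseFrames, frame) are assumptions made for this example; with Spring you would normally let SseEmitter do this formatting for you.

```java
final class SseFrames {

    /**
     * Builds one SSE message. id and event are optional (pass null to omit them);
     * multi-line data is sent as one "data:" line per line of text.
     */
    static String frame(String id, String event, String data) {
        StringBuilder sb = new StringBuilder();
        if (id != null) {
            sb.append("id: ").append(id).append('\n');
        }
        if (event != null) {
            sb.append("event: ").append(event).append('\n');
        }
        for (String line : data.split("\n", -1)) {
            sb.append("data: ").append(line).append('\n');
        }
        return sb.append('\n').toString(); // the blank line marks the end of the message
    }
}
```

Calling SseFrames.frame(null, null, "a\nb") produces two data lines followed by a blank line, and the client joins them back into "a\nb".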

SSE code on Client side and server side:-

Client Side Code

The EventSource object is the core object, and it is supported natively by the browser.

To open a connection to the server, the client needs to instantiate an EventSource object.

    const eventSource = new EventSource('http://localhost:8080/subscribe/');

The browser sends this GET request with the Accept header set to text/event-stream.

The response to this request must contain the Content-Type header with the value text/event-stream, and the response must be encoded as UTF-8.

To process these events in the browser, an application needs to register a listener for the message event.

The data property of the event object contains the message.

    eventSource.onmessage = event => {
        // assumes the server sends JSON text in the data field
        const msg = JSON.parse(event.data);
        // access your attributes from the msg.
    };

The client API also supports events such as open and error.

The open event fires as soon as the client receives a 200 response for the GET call to /subscribe.

The error event fires on the client when there is a network error or when the server terminates the connection.

Server Side Code

The HTTP response to the above GET request on the /subscribe endpoint must contain the Content-Type header with the value text/event-stream.

Spring Boot supports SSE by providing the SseEmitter object. It was introduced in Spring 4.2 (Spring Boot 1.3).
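To see this response requirement without any framework, here is a small sketch that uses only the JDK's built-in HttpServer and HttpClient (Java 11 or newer). It is not the Spring code from the repository: the class name JdkSseDemo, the "tick" payload, and the choice to send exactly three events and then close are all assumptions made for this example.

```java
import com.sun.net.httpserver.HttpServer;
import java.io.IOException;
import java.io.OutputStream;
import java.io.UncheckedIOException;
import java.net.InetSocketAddress;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.nio.charset.StandardCharsets;
import java.util.List;
import java.util.stream.Collectors;
import java.util.stream.Stream;

final class JdkSseDemo {

    /** Starts a throwaway server on a free port; GET /subscribe streams three events. */
    static HttpServer startServer() throws IOException {
        HttpServer server = HttpServer.create(new InetSocketAddress("127.0.0.1", 0), 0);
        server.createContext("/subscribe", exchange -> {
            exchange.getResponseHeaders().set("Content-Type", "text/event-stream");
            exchange.sendResponseHeaders(200, 0); // 0 = length unknown, body is streamed
            try (OutputStream out = exchange.getResponseBody()) {
                for (int i = 1; i <= 3; i++) {
                    out.write(("data: tick " + i + "\n\n").getBytes(StandardCharsets.UTF_8));
                    out.flush(); // push each event to the client right away
                }
            } // closing the body ends the stream
        });
        server.start();
        return server;
    }

    /** Connects like an SSE client would and collects the raw "data:" lines. */
    static List<String> subscribe(int port) throws IOException, InterruptedException {
        HttpRequest request = HttpRequest
                .newBuilder(URI.create("http://127.0.0.1:" + port + "/subscribe"))
                .header("Accept", "text/event-stream")
                .build();
        HttpResponse<Stream<String>> response =
                HttpClient.newHttpClient().send(request, HttpResponse.BodyHandlers.ofLines());
        try (Stream<String> lines = response.body()) {
            return lines.filter(line -> line.startsWith("data:")).collect(Collectors.toList());
        }
    }

    /** Runs server and client once and always stops the server afterwards. */
    static List<String> runOnce() {
        HttpServer server = null;
        try {
            server = startServer();
            return subscribe(server.getAddress().getPort());
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        } finally {
            if (server != null) {
                server.stop(0);
            }
        }
    }
}
```

A real SSE endpoint would keep the response open and write events as they happen; this sketch closes the stream after three events only so that it terminates.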

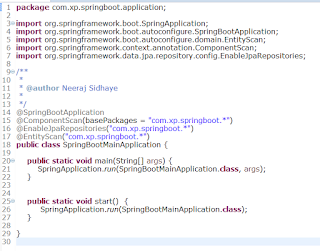

Create a Spring Boot application from start.spring.io and select Web as a dependency.

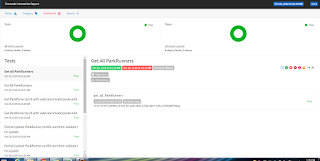

You can have a controller with a GET REST endpoint at /subscribe that allows a client to establish a connection.

Another REST endpoint, POST at /event, allows us to submit new events on the server.

This POST /event endpoint (or a similar one) can be called from any other server-side component to send a real-time notification.

The /event endpoint then sends the event to the connected clients.

Each client connection is represented by its own instance of SseEmitter.

One limitation of Spring SSE is that it does not give you tools to manage these SseEmitter instances. So, for this example, I have used a list that stores the SseEmitter objects and releases them on error, completion, or timeout.
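The list-based bookkeeping described above can be sketched roughly as follows. To keep the example runnable with the JDK alone, it uses a hypothetical EventSink interface as a stand-in for SseEmitter; SinkRegistry and its method names are likewise assumptions for this sketch and are not the code from the repository.

```java
import java.io.IOException;
import java.util.List;
import java.util.concurrent.CopyOnWriteArrayList;

/** Hypothetical stand-in for SseEmitter: something we can push text to. */
interface EventSink {
    void send(String data) throws IOException;
}

final class SinkRegistry {

    // CopyOnWriteArrayList lets us remove a sink while another thread iterates.
    private final List<EventSink> sinks = new CopyOnWriteArrayList<>();

    /** Called when a client subscribes. */
    void register(EventSink sink) {
        sinks.add(sink);
    }

    /** Called on error, completion, or timeout so the sink is no longer used. */
    void release(EventSink sink) {
        sinks.remove(sink);
    }

    int size() {
        return sinks.size();
    }

    /** Sends data to every registered sink and returns how many deliveries succeeded. */
    int broadcast(String data) {
        int delivered = 0;
        for (EventSink sink : sinks) {
            try {
                sink.send(data);
                delivered++;
            } catch (IOException e) {
                release(sink); // the client is gone, stop tracking it
            }
        }
        return delivered;
    }
}
```

With a real SseEmitter, the release step would typically also be wired into the emitter's onCompletion, onTimeout, and onError callbacks, so that an emitter is dropped from the list even when no send is in progress.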

An SseEmitter object is created as below.

    SseEmitter emitter = new SseEmitter();

SseEmitter connection keep alive time:-

By default, Spring Boot with the embedded Tomcat server keeps the SSE HTTP connection open for 30 seconds.

We can override these 30 seconds via configuration. The value is given in milliseconds.

    spring.mvc.async.request-timeout=50000

This entry will keep the HTTP connection open for 50 seconds.

Alternatively, you can pass the timeout value, again in milliseconds, directly to the SseEmitter constructor as below.

    // keep connection open for 150 seconds
    SseEmitter emitter = new SseEmitter(150_000L);

Auto Re-connect mechanism:-

The nice thing about Server-Sent Events is that they have a built-in reconnection feature. That means that if the connection is dropped, for example due to a server error, the client automatically tries to reconnect after roughly 3 seconds (the exact default depends on the browser).

While the server is unreachable, the browser keeps retrying until it gets a 200 HTTP response back.

Waiting for those 3 seconds and then reconnecting automatically is a browser feature; the application does not need any code for it.

The server can change this 3-second wait by sending a new value, in milliseconds, in the retry field of the event stream. Note that retry is a field of the stream, sent together with a message or on its own, and not an HTTP header.

A client can be told to stop reconnecting by answering its request with the HTTP 204 No Content response code.
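As a small illustration of that retry value, the sketch below builds the text a server could write into the event stream to ask the client to wait 10 seconds before reconnecting. The class and method names are assumptions made for this example.

```java
final class RetryFrames {

    /** Builds a stream fragment that sets the client's reconnection delay. */
    static String retryFrame(long millis) {
        if (millis < 0) {
            throw new IllegalArgumentException("retry delay must not be negative");
        }
        // The value is an integer number of milliseconds; the blank line ends the block.
        return "retry: " + millis + "\n\n";
    }
}
```

RetryFrames.retryFrame(10_000) yields "retry: 10000" followed by a blank line. In Spring, the same value can be set on an individual event through SseEmitter.event().reconnectTime(10_000L) instead of writing the text by hand.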

To recap, this post covered:

- SSE concepts,

- SSE use cases,

- How does SSE work,

- Message Formats,

- SSE code on Client side and Server side,

- SseEmitter connection keep alive time,

- Auto Re-connect mechanism

Hope you liked this walkthrough of the basic SSE concepts.

Please continue with the next post, Server Sent Event - Development and Test Automation.
